Affective computing is the study and development of systems and devices able to recognize, express, synthesize, and model human emotions. It is an interdisciplinary research field spanning computer science, psychology, and cognitive science, and it studies the interaction between technology and feelings.

Affective computing seeks to give machines the ability to establish two-way emotional communication: to evaluate a user’s emotion and to display an emotional reaction, for example through expressive avatars [1] [2]. Affective computing is therefore directly related to the field of Human-Machine Interaction.

Brief History of Affective Computing

Emotions perform many functions in humans, including communication when interacting with other people. Many disciplines are interested in emotion: philosophy, history, ethnology, ethology, neuroscience, psychology, and the arts, for which emotion is a raw material.

In humans, empathy is the ability to share another person’s emotions and to understand their point of view [3]. Attempts to make artificial intelligence systems understand and react to their environment belong to the cognitive domain. Attempts to measure human emotions objectively have their roots in the beginnings of experimental psychology in the nineteenth century [4].

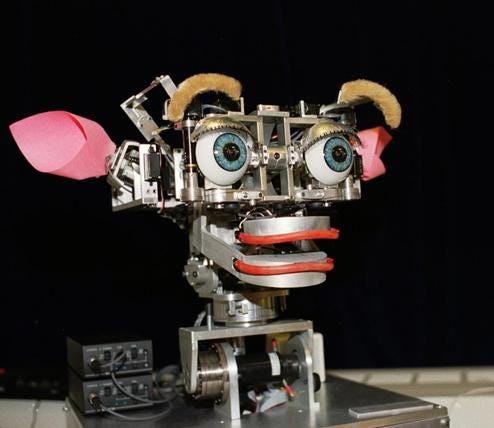

Computer scientists began to study emotion in the 1990s. In 1995, Rosalind Picard, a computer scientist at the Massachusetts Institute of Technology (MIT), published an article that laid the foundation for this new discipline [5]. In the same period, also at MIT, Cynthia Breazeal developed Kismet, one of the first social robots capable of detecting certain social and affective cues.

In the 2000s, computer prototypes displaying empathy, sympathy, and active listening skills were developed and tested. Their purpose was to allow computers to deal with their users’ frustration.

Over the years, recognition systems have addressed the different channels of emotional communication, starting with the face and the voice, then body gestures, and finally the physiological reactions associated with emotion.

Emotion

The term “emotion” is commonly used but difficult to define precisely. Intuitively, we feel that emotion is both a physical and a psychological phenomenon. But what characterizes an emotion? Anger is unquestionably an emotion. What about love, stress, nervousness, or hostility?

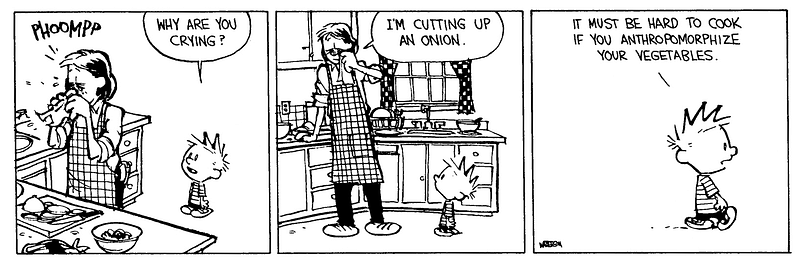

The concept of emotion is very familiar, and we all feel able to define it. However, it is more complex than it seems. The intuitive definitions we can offer do not delimit the subject precisely, and many ambiguities exist, in particular around related terms such as affect, mood, or feeling. The links between these concepts and their boundaries are not obvious. Culture also plays an important role in how emotion is understood. For example, “ijirashii” (いじらしい) is a Japanese term for the emotion felt on seeing a commendable person struggling through a difficulty. This is why we need to define precisely the type of emotion we are dealing with.

Building on the survey of definitions in [6], we can define emotion as a fast response that is at once bodily (neurological, physiological…) and cognitive (social, memory, knowledge…), triggered by an external event (an event or object) or an internal one (a memory), and that captures the person’s attention.

Emotions have a universal and innate character and an adaptive function. Their most easily observed manifestations are facial expressions, which are universally recognized and undoubtedly play an important role in social interactions. Facial expressions have been studied in particular by Izard (1982) and by Ekman and Friesen (1977).

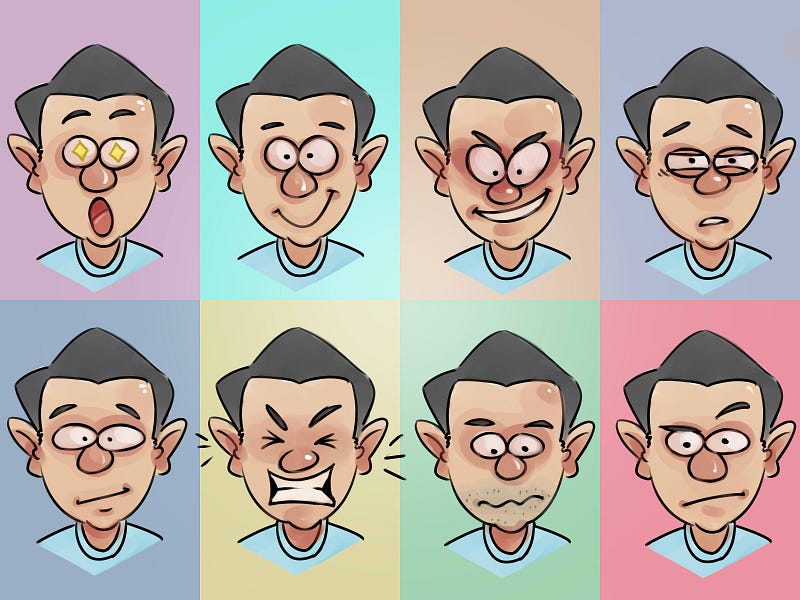

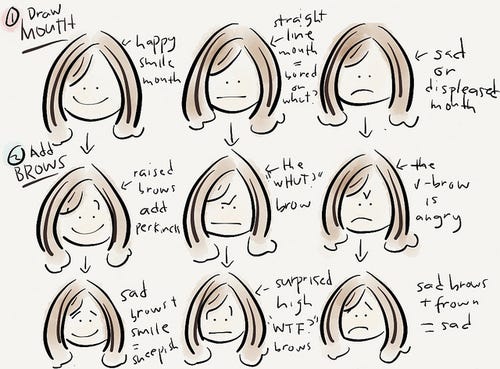

Ekman and Friesen (1977) catalogued the visually distinguishable muscular contractions of the face, called action units. Many studies show that emotional expression by Animated Conversational Agents (ACAs) helps improve human-machine interaction [7]. However, to ensure this positive effect, the expressed emotions must suit the events and social context of the interaction. When an agent’s emotional expressions are inappropriate, the agent is perceived more negatively: less credible, less pleasant, and so on [8]. What degrades the interaction is the user’s misunderstanding of the agent’s emotional expressions.

For emotional communication to be understandable, the agent’s emotions must therefore be expressed both correctly and at the right moment in the interaction. To achieve this, an ACA first needs a rich repertoire of expressions. Existing virtual characters often use stereotyped, very intense expressions limited to the face, which gives them a caricatural appearance and restricts their emotional communication to a few typical emotions (joy, anger, surprise, sadness, disgust, and fear). Yet the user’s correct recognition of these emotions is a necessary condition for understanding the agent.

As shown in [9], some emotions, such as embarrassment or pride, are difficult to perceive through facial expressions alone. To overcome this limitation and give ACAs the ability to communicate subtler emotions such as relief or embarrassment, an algorithm has been developed that generates sequences of signals across different modalities (gestures, face, torso, etc.) to express emotions. This model was built from manual annotations of videos of individuals, from which the relations between the different modalities during emotional expression were extracted [9].

Voice

The voice is a tool of communication: it lets us reach out to others and receive them through their own voices. It is also a mode of emotional expression, through which we transmit and share information and emotions with others. The study of the voice is therefore of particular interest in affective computing: vocal behavior and tone play an important role in how others interpret an utterance in a given context, especially when detecting sarcasm and irony. For a machine to understand its interlocutor and discern innuendo, it is therefore important that it can listen to the other person and respond in the same vocal tone, or in the opposite one.

We can distinguish three characteristic parameters of the voice:

- Intensity: air expelled from the lungs rises through the larynx toward the vocal cords. The (variable) air pressure beneath the vocal cords determines the intensity of the voice, measured in decibels: about 50 to 60 dB for conversation, up to 120 dB for operatic singing;

- Frequency: the air passes through the vocal cords, which (under the control of the brain) open and close. The number of opening and closing cycles per second is the frequency of the voice, perceived as its pitch (low, medium, or high) and measured in hertz. Typical average values are about 100 Hz for a man and 200 Hz for a woman;

- Timbre: the air then flows through the resonators (throat, mouth, nasal cavity), which give the voice its timbre. A timbre can be described as nasal, warm, sensual, metallic, toneless (“white”), etc.
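The first two parameters can be estimated directly from a digitized recording. The following is a minimal sketch, assuming a mono signal stored as a NumPy array: it computes a relative intensity (an RMS level in dB, not a calibrated sound pressure level) and a rough fundamental frequency from the autocorrelation peak. The function names and the 60 to 400 Hz search range are illustrative choices, not a standard; timbre has no comparable single-number measure and is left out.

```python
import numpy as np


def rms_level_db(signal: np.ndarray, ref: float = 1.0) -> float:
    """Relative intensity: RMS level in dB with respect to `ref`.

    With ref=1.0 and samples in [-1, 1] this is dBFS. It is NOT a sound
    pressure level (dB SPL), which would need a calibrated microphone.
    """
    rms = np.sqrt(np.mean(np.square(signal)))
    return float(20.0 * np.log10(max(rms, 1e-12) / ref))


def estimate_f0(signal: np.ndarray, sr: int,
                fmin: float = 60.0, fmax: float = 400.0) -> float:
    """Rough fundamental frequency (Hz) via autocorrelation.

    Picks the lag with the strongest autocorrelation inside the plausible
    pitch range [fmin, fmax] and converts that lag (in samples) to Hz.
    """
    x = signal - np.mean(signal)
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # lags 0 .. N-1
    lag_min = int(sr // fmax)
    lag_max = min(int(sr // fmin), len(ac) - 1)
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sr / best_lag


if __name__ == "__main__":
    sr = 16_000
    t = np.arange(int(0.1 * sr)) / sr
    voiced = 0.5 * np.sin(2 * np.pi * 200.0 * t)  # synthetic 200 Hz tone
    print(round(estimate_f0(voiced, sr), 1))      # close to 200 Hz
    print(round(rms_level_db(voiced), 1))
```

Real speech is not a pure tone, so practical pitch trackers work frame by frame and add voicing detection; this sketch only illustrates the principle.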

Facial Expression

As noted above, emotions are considered universal and innate, with an adaptive function, and their most easily observed manifestations are facial expressions, which are universally recognized and play an important role in social interactions. The face is therefore an important source of information: beyond the synchronization between speech and facial animation, the details of a face play an important role in transmitting emotion [10].

The face carries two main kinds of information: identity and expressions.

- Identity is determined almost entirely by the shape and position of the bones of the skull. These characteristics, unique to each individual, make it possible to distinguish one person from another;

- Expressions are determined by the activation of the facial muscles. In addition, certain emotional processes can change the color of the skin locally, through greater blood flow than usual.

The human face has great expressive richness, far exceeding what is observed in other primates [11] [12]. This peculiarity, which results from the differentiation of the human facial musculature, is clearly reflected in the coding systems that have emerged over the past two decades. The Facial Action Coding System (FACS), developed by [13], distinguishes 46 action units, each corresponding to a distinct change in facial appearance. These 46 action units can be produced separately or in combinations of up to ten elements, and the authors estimate that the human face can produce several thousand such combinations.

Combinations of facial movements are thought to reveal the nature of the emotion, its intensity, the degree of control exerted over it, and certain features of the situation that provokes it. Although efforts to catalog facial expressions of emotion are still in their infancy, current evidence suggests that each fundamental emotion is expressed through several distinct configurations. Notable proposals in this regard have been made by [14], whose repertoire contains nearly a hundred expressions.

According to psycho-evolutionist theories [15] [16], facial expressiveness is clearly used in emotional communication and in the regulation of social interactions. Facial expressions allow the people involved in an interaction to assess each other’s emotional state, and each person adjusts their behavior partly on the basis of this assessment. This regulatory system would benefit the species by reducing conflict and increasing social cohesion [16]. Non-verbal facial behavior that accompanies speech, such as a slight nod or a quick raising of the eyebrows, increases the intelligibility of speech [17] and, above all, reflects the speaker’s emotion. Research has also confirmed that such non-verbal behavior is closely synchronized with speech [17].

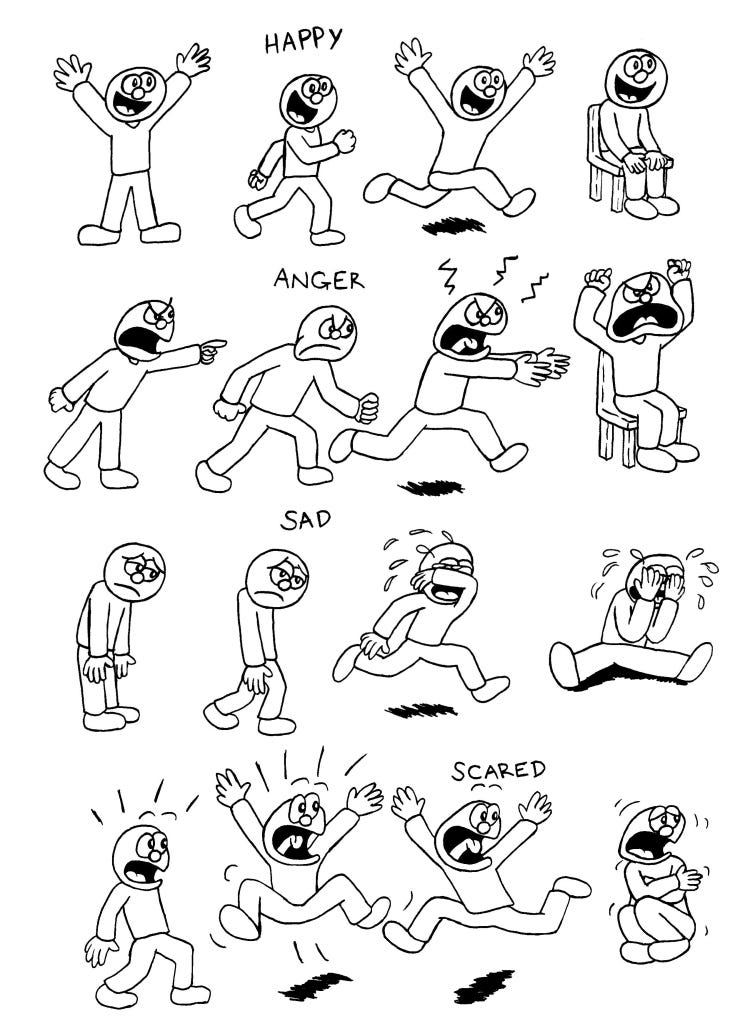

Body Language

Non-verbal communication, or body language, refers to any communication that does not use speech. It relies not on words but on gestures, actions and reactions, attitudes, facial expressions (including micro-expressions), and other signals, conscious or unconscious. The whole body conveys a message that can be as effective as the words one utters, and interlocutors react unconsciously to each other’s non-verbal messages. Non-verbal communication thus adds an extra dimension to the message, sometimes contradicting it. This is why, for human-machine communication, it is useful for machines to identify body expressions easily and react accordingly.

Many studies have shown the importance of non-verbal behavior in communication. According to [18], more than half of the information in an interpersonal interaction is expressed through non-verbal behavior. In human-machine interaction, it is therefore essential that virtual agents be capable of producing communicative, expressive gestures.

However, the recognition of body expressions has its limits. Although there are similarities, body expressions do not always have the same meaning across cultures. Moreover, body language is not learned explicitly but implicitly, through internalization and imitation. There is therefore no clearly defined interpretation of body language.

Physiological Indicators

The physiological activity of an individual is closely related to their emotional states. The autonomic nervous system, with its sympathetic and parasympathetic branches, controls various physiological responses that can be measured with simple techniques. For example, changes in heart rate, blood pressure, body temperature, electroencephalographic rhythms, or skin conductance may occur as a result of emotionally charged events.

Empathy

Empathy comes from the Greek word “empatheia”, from em (“in”) and pathos (“feeling”). The concept refers to an individual’s ability to understand (cognitive empathy) and to feel (emotional empathy) what another person is experiencing, and to act accordingly (compassionate empathy), by detecting and interpreting that person’s feelings and thoughts [19].

Cognitive Empathy

The old proverb “Walk a mile in someone’s shoes and you will understand” seems to many to describe empathy, but it describes almost exclusively cognitive empathy. Cognition is thinking or knowing. Cognitive empathy is the process of evaluating a situation and knowing what another person is going through, because we can grasp the situation with our own knowledge.

It is a form of empathy because it involves projecting our knowledge onto a similar situation and acknowledging to the other person that we understand their experience, for example because we have been through something similar. Unfortunately, people often use this type of empathic response to manipulate others rather than out of compassion. Showing this type of empathy can therefore be positive or negative.

Emotional Empathy

The next level of empathy, emotional empathy, involves not only knowing what is happening to someone else but also feeling what they are feeling. Reacting emotionally on becoming aware of another person’s emotional pain produces emotional contagion: our reaction to their situation is itself a contagious emotional response. According to psychologists such as Daniel Goleman in his book “Social Intelligence”, this happens because of the mirror neuron system, which allows humans and some other mammals to mirror what they observe in others, whether postures or emotions.

Compassionate Empathy

It is a deeper response than simply knowing or feeling: this type of empathy drives a person to act because of what another person is going through. Compassionate empathy is what we usually mean by compassion: feeling someone’s pain and acting accordingly. Like sympathy, it involves feeling pain and concern for someone, but it adds action to alleviate the problem. It moves people to act when they see another person in distress.

Emotion Recognition

Emotion recognition is a young field, but its growing maturity creates new needs for modeling and for integration into existing models. It consists of associating an emotion with a facial image, a sound, or physiological data, with the goal of inferring the person’s internal emotional state from that modality.

Emotion Recognition by Voice Modality

Speech recognition is a computer technique that converts the human voice, captured by a microphone, into machine-readable text. Deep learning now allows speech recognition to reach relatively low error rates: IBM reported a 5.5% word error rate for its system, compared with an estimated 5.1% for human transcribers.
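Error rates such as those quoted for speech recognition are usually word error rates (WER): the number of word substitutions, deletions, and insertions needed to turn the system’s transcript into the reference transcript, divided by the number of words in the reference. A minimal sketch of that computation, using a word-level edit distance; the function name is hypothetical:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference length,
    computed with a word-level Levenshtein (edit distance) table."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = minimum number of edits turning ref[:i] into hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("the cat sat on the mat", "the cat sit on mat")` finds one substitution and one deletion over six reference words, a WER of about 0.33.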

However, voice recognition has its limitations. Because a voice can easily be imitated or spoofed, for example, it is unreliable as a means of authentication. Different accents within a language can also hinder recognition.

Nonetheless, the techniques used for speech recognition can be applied to understanding emotions, by focusing on how a sentence is pronounced rather than only on its content. Shiqing Zhang [20] proposes a method for recognizing emotions in a speech signal using Fuzzy Least Squares Support Vector Machines (FLSSVM), based on prosodic and voice-quality features extracted from emotional speech.
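The fuzzy variant used in [20] is not reproduced here. As a simplified illustration of the underlying idea, the sketch below trains a standard, non-fuzzy binary least squares SVM (in the formulation of Suykens and Vandewalle), which replaces the usual SVM quadratic program with a single linear system. The two “prosodic” features, the synthetic data, and the class names are assumptions for the demo; a real system would extract many prosodic and voice-quality features and handle several emotion classes.

```python
import numpy as np


def rbf_kernel(A: np.ndarray, B: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian kernel matrix K[i, j] = exp(-||A_i - B_j||^2 / (2 sigma^2))."""
    sq = (np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))


class LSSVMClassifier:
    """Standard binary LS-SVM (not the fuzzy variant of [20]); labels are -1/+1."""

    def __init__(self, gamma: float = 10.0, sigma: float = 1.0):
        self.gamma, self.sigma = gamma, sigma

    def fit(self, X: np.ndarray, y: np.ndarray) -> "LSSVMClassifier":
        n = len(y)
        omega = np.outer(y, y) * rbf_kernel(X, X, self.sigma)
        # Linear system: [[0, y^T], [y, Omega + I/gamma]] @ [b, alpha] = [0, 1]
        A = np.zeros((n + 1, n + 1))
        A[0, 1:] = y
        A[1:, 0] = y
        A[1:, 1:] = omega + np.eye(n) / self.gamma
        rhs = np.concatenate(([0.0], np.ones(n)))
        solution = np.linalg.solve(A, rhs)
        self.b, self.alpha = solution[0], solution[1:]
        self.X, self.y = X, y.astype(float)
        return self

    def decision_function(self, X: np.ndarray) -> np.ndarray:
        return rbf_kernel(X, self.X, self.sigma) @ (self.alpha * self.y) + self.b

    def predict(self, X: np.ndarray) -> np.ndarray:
        return np.where(self.decision_function(X) >= 0.0, 1, -1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical standardized features per utterance: [mean pitch, mean energy]
    high_arousal = rng.normal([2.0, 2.0], 0.5, size=(20, 2))  # e.g. anger
    low_arousal = rng.normal([-2.0, -2.0], 0.5, size=(20, 2))  # e.g. sadness
    X = np.vstack([high_arousal, low_arousal])
    y = np.array([1] * 20 + [-1] * 20)
    model = LSSVMClassifier(gamma=10.0, sigma=1.0).fit(X, y)
    print("training accuracy:", np.mean(model.predict(X) == y))
```

Multi-class recognition is commonly built from several such binary classifiers (one-versus-rest or one-versus-one).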

Recognition of Emotions from Facial Expressions

Facial recognition seems to be the most natural biometric technology and one of the easiest to put into practice. Facial expressions, moreover, are naturally used to send emotional messages between individuals. This is why, for human-machine communication, it is useful for machines to recognize and understand facial expressions easily and react accordingly. Facial recognition software identifies individuals from the morphology of their face in an image or from a camera sensor. Its effectiveness depends on three key factors:

- The quality of the image;

- The identification algorithm;

- The reliability of the database.

These same techniques can be applied to the recognition of facial expressions, and several techniques for recognizing expressions have been proposed in the literature: [21], [22], [23], [24], [25], [26], [27], [28]. The work in [29] proposes a multimodal approach to recognizing eight emotions that integrates information from facial expressions, body movements and gestures, and speech [30].
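One common way to combine modalities is decision-level, or “late”, fusion: each modality has its own classifier, and their per-class probabilities are merged. The sketch below shows a weighted average under that assumption; the cited multimodal work may fuse information differently (for example at the feature level), and the label set, outputs, and weights are hypothetical.

```python
import numpy as np

# Hypothetical label set shared by all modality classifiers.
EMOTIONS = ["anger", "joy", "sadness"]


def late_fusion(probs_by_modality: dict, weights: dict) -> np.ndarray:
    """Weighted average of per-modality class-probability vectors.

    Each modality (face, body, speech, ...) is assumed to have its own
    classifier producing a probability vector over the same EMOTIONS.
    """
    total = sum(weights[m] for m in probs_by_modality)
    fused = sum(weights[m] * np.asarray(p, dtype=float)
                for m, p in probs_by_modality.items())
    return fused / total


if __name__ == "__main__":
    outputs = {
        "face":   [0.6, 0.3, 0.1],
        "body":   [0.2, 0.5, 0.3],
        "speech": [0.5, 0.4, 0.1],
    }
    fused = late_fusion(outputs, {"face": 1.0, "body": 1.0, "speech": 1.0})
    print(EMOTIONS[int(np.argmax(fused))], fused.round(3))
```

The weights let a system trust a modality more when it is known to be more reliable, for instance down-weighting the face when it is partly occluded.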

Recognition of Emotions from Body Language

Darwin [31] was the first to propose several principles describing in detail how body language and posture are associated with emotions in humans and animals. Although Darwin’s analysis of emotion gave a central place to body language and posture [31], [32], [33], existing emotion detection systems have neglected body posture in favor of facial expressions and speech. [34] also suggests that the information conveyed by body language has not been sufficiently explored.

In addition, body posture can provide information that facial expressions and speech sometimes cannot convey. The greatest benefit of recognizing emotions from bodily information is that bodily signals are largely unconscious and unintentional, and therefore less susceptible to the environment than facial expressions and speech.

Studies of deception [35] [36] [37] [38] show that it is much easier to conceal deception through facial expression than through body posture, which is less controllable. [35] [36] [39] and [40] propose real-time approaches for automatically recognizing emotions from body movements.
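Real-time body-movement recognizers typically start from tracked joint positions and compute a few expressive features per time window before classification. The sketch below shows two such features under stated assumptions: joints arrive as a NumPy array of shape (frames, joints, coordinates) from some pose tracker, and the feature names and definitions are illustrative, not the ones used in the cited works.

```python
import numpy as np


def quantity_of_motion(frames: np.ndarray, fps: float) -> float:
    """Mean joint speed (distance units per second) over a clip.

    frames: array of shape (T, J, D) with T >= 2 frames, J joints,
    and D = 2 or 3 coordinates per joint.
    """
    # Per-frame displacement of every joint, shape (T-1, J)
    step = np.linalg.norm(np.diff(frames, axis=0), axis=-1)
    return float(step.mean() * fps)


def body_spread(frame: np.ndarray) -> float:
    """Mean distance of the joints from their centroid in one frame.

    Smaller values indicate a more contracted (closed) posture,
    larger values a more expanded (open) one.
    """
    centroid = frame.mean(axis=0)
    return float(np.linalg.norm(frame - centroid, axis=-1).mean())
```

Per-window values of such features (fast and expanded versus slow and contracted movement, for instance) would then be passed to a classifier.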

Recognition of Emotions from Physiological Indicators

It has been shown that physiological changes play an important role in emotional experience [41]. Emotion is a psychophysiological process, produced by the activity of the limbic system in response to a stimulus, that leads to activation of the somatosensory system [42]. In addition, different peripheral physiological changes give rise to different emotions, and bodily feedback is necessary for an emotion to emerge [41].

In [43], [44], [45], the authors use electrocardiography (ECG) to classify a person’s emotions, while [46] uses electroencephalography (EEG). In [47], the authors combine several physiological signals: skin temperature, photoplethysmography, electrodermal activity, and the electrocardiogram.
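ECG-based emotion classifiers often work on heart-rate and heart-rate-variability features rather than on the raw trace. Which features [43], [44], [45] actually use is not stated here, so the following is only an illustrative sketch, assuming the R peaks have already been detected and converted to R-R intervals in milliseconds; the function name is hypothetical.

```python
import numpy as np


def heart_rate_features(rr_ms) -> dict:
    """Simple ECG-derived features from R-R intervals in milliseconds.

    mean_hr_bpm: mean heart rate in beats per minute.
    sdnn_ms:     sample standard deviation of the R-R intervals.
    rmssd_ms:    root mean square of successive differences, a common
                 short-term heart-rate-variability measure.
    """
    rr = np.asarray(rr_ms, dtype=float)
    if rr.size < 2:
        raise ValueError("need at least two R-R intervals")
    diffs = np.diff(rr)
    return {
        "mean_hr_bpm": float(60_000.0 / rr.mean()),
        "sdnn_ms": float(rr.std(ddof=1)),
        "rmssd_ms": float(np.sqrt(np.mean(diffs ** 2))),
    }
```

For instance, intervals of 800, 900, and 800 ms give a mean heart rate of 72 beats per minute and an RMSSD of 100 ms; such values, computed per window, form the feature vector fed to a classifier.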

Model of Emotion

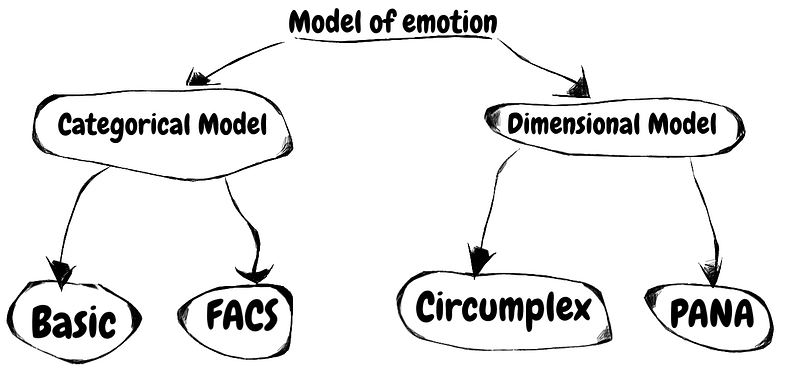

Over many years of research on emotions, researchers have defined many systems for classifying these phenomena. Whatever the theory, two classifications of emotions are usually found [34]: the categorical, or discrete, approach and the dimensional approach.

In the categorical approach, emotional processes can be explained by a set of basic, or fundamental, emotions that are thought to be innate and common to the whole human species. There is no consensus on the number and nature of these so-called fundamental emotions. The most widely used model associated with the categorical approach is FACS.

The dimensional approach differs from the categorical approach in that each emotion is described by its position in a multidimensional space defined by a fixed number of concepts [34]. These dimensions can include pleasure, arousal, or power, and they vary according to the needs of the model. The most widely used model is Russell’s, with valence and activation (or valence-arousal) dimensions represented by Russell’s circumplex.

Categorical Model of Emotion

Basic Model of Emotion

The theory of discrete emotions holds that all humans share an innate set of basic emotions that are recognizable across cultures. These basic emotions are called “discrete” because each is thought to be distinguishable by an individual’s facial expression and biological processes [48]. Paul Ekman and colleagues [49], [50] concluded that the six basic emotions are anger, disgust, fear, happiness, sadness, and surprise.

Ekman explains that each of these emotions has particular characteristics and can be expressed to varying degrees. Each emotion therefore acts as a discrete category rather than as an individual emotional state.

This categorical approach offers a clear advantage in computer science: recognizing an emotion from a discrete set amounts to choosing one category among those in the set, and signal classification is a widely studied problem in computer science, with adequate tools for solving it.

Discrete models of emotion are, by their very nature, particularly suited to automatic emotion recognition by computers. They are therefore widely used in the field of emotion recognition [51].

Facial Action Coding System

FACS (Facial Action Coding System), developed by Ekman and Friesen [52], is a comprehensive description of facial movements. It associates a code with each visually distinguishable muscular activation of the face. These atomic elements are called Action Units (AUs). The FACS coder’s manual thus contains a visual description of the changes in the face produced by each AU or combination of AUs. In addition, each AU can be scored at different intensities; the authors defined up to five intensity levels for each AU.

An Action Unit does not necessarily correspond to a single facial muscle. Because of the muscular structure of the face, activating one muscle often displaces its neighbors, since facial muscles are attached more to each other than to the bones of the skull.

Reaching the level of expertise needed to code facial movements requires long training, and even for an expert the analysis is lengthy and tedious.

Forty-six action units are distinguished for describing human facial expressions. However, Scherer et al. [51] observed about 7,000 different combinations of action units in spontaneous expressions.

Moreover, a combination of several facial movements cannot simply be described as the visual combination of each isolated movement. A coarticulation phenomenon occurs, in the same way that the pronunciation of a word cannot be reduced to the concatenation of the pronunciations of its phonemes.

A method for describing facial movements was developed by the psychologists Paul Ekman and Wallace Friesen in 1978 [13] [14], [53]. It has become the main descriptive tool used in studies of facial expression, for example in the affective sciences. This nomenclature was itself inspired by the work of the Swedish anatomist Carl-Herman Hjortsjö [54], published in his book on facial mimicry.

Individual facial muscle movements are coded in FACS from slight, instantaneous changes in facial appearance [55]. This systematic categorization of the physical expression of emotions is a common standard that has proved useful to psychologists and animators. Because manual coding is subjective and time-consuming, FACS has also been implemented as computer-based automated systems that detect faces in videos, extract geometric features of faces, and generate temporal profiles of each facial movement [55]. The pioneering FM Facial Action Coding System 3.0 (FM FACS 3.0) [56] was created in 2018 by Dr. Freitas-Magalhães and presents 5,000 segments in 4K, using 3D technology and real-time automatic recognition (FaceReader 7.1). FM FACS 3.0 includes 8 pioneering action units (AUs) and 22 pioneering tongue movements (TMs), in addition to a functional and structural nomenclature [57].

In the FACS system, facial contractions are broken down into action units. The FACS system is based on the description of 46 Action Units identified by a number in the FACS nomenclature.
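To show how numbered AU codes can feed the categorical approach, the sketch below parses a FACS-style code such as “6C+12D” (AU number plus an optional intensity letter, A for trace up to E for maximum) and returns the basic emotion whose prototype AU set overlaps most with the observed AUs. The prototype table is a commonly cited, EMFACS-style simplification and should be treated as an assumption, not as official FACS rules; the function names are hypothetical.

```python
import re

# Commonly cited prototype AU combinations for six basic emotions
# (simplified, EMFACS-style; an assumption, not the official coding rules).
PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}

AU_CODE = re.compile(r"^(\d+)([A-E])?$")


def parse_aus(code: str) -> dict:
    """Parse a string such as '6C+12D' into {AU number: intensity letter or None}."""
    aus = {}
    for token in code.replace(" ", "").split("+"):
        match = AU_CODE.match(token)
        if match is None:
            raise ValueError(f"unrecognized AU token: {token!r}")
        aus[int(match.group(1))] = match.group(2)
    return aus


def closest_emotion(code: str) -> tuple:
    """Return (emotion, score) for the prototype with the highest Jaccard overlap."""
    observed = set(parse_aus(code))

    def jaccard(proto: set) -> float:
        return len(observed & proto) / len(observed | proto)

    best = max(PROTOTYPES, key=lambda name: jaccard(PROTOTYPES[name]))
    return best, jaccard(PROTOTYPES[best])
```

For example, `closest_emotion("6C+12D")` matches the happiness prototype exactly. Because spontaneous expressions combine AUs far more freely than these prototypes, real systems learn the mapping from data instead.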

Dimensional Model of Emotion

Dimensional models of emotion attempt to conceptualize human emotions by locating them in a space of two or three dimensions. Most dimensional models include valence and arousal (or intensity) dimensions.

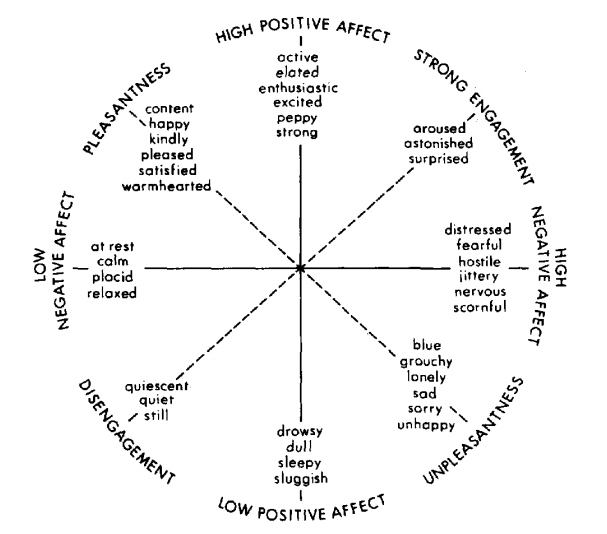

Several dimensional models of emotion have been developed, although only a few remain dominant and widely accepted today [58]. The most important two-dimensional models are the circumplex model, the vector model, and the Positive Activation Negative Activation (PANA) model.

Circumplex Model of Emotion

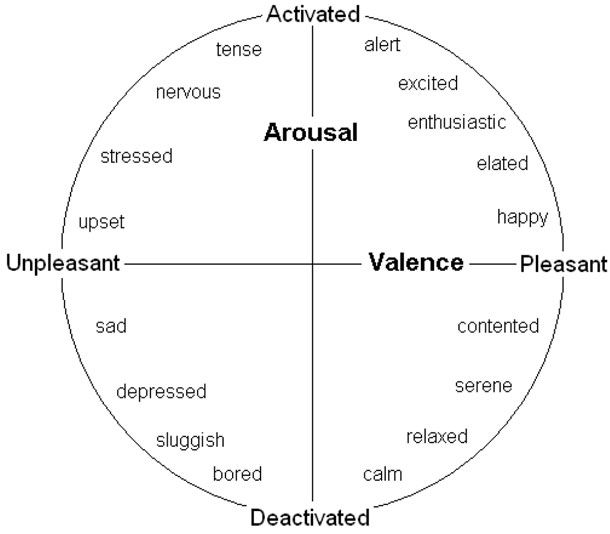

The circumplex model of emotion was developed by James Russell [59]. This model suggests that emotions are distributed in a two-dimensional circular space defined by arousal and valence. Arousal is the vertical axis and valence the horizontal axis, while the center of the circle represents neutral valence and a medium level of arousal [58].

In this model, emotional states can be represented at any level of valence and arousal, or at a neutral level of one or both of these factors. Circumplex models have been used most often to test emotional word stimuli and emotional facial expressions, and to study emotional states [60].
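Because the circumplex is a circle, a (valence, arousal) point can be read in polar form: the angle indicates the kind of affect and the distance from the center its intensity. The sketch below assumes both values are normalized to [-1, 1] and labels each 45-degree sector with one of the eight reference terms commonly drawn around Russell’s circle (pleasure at 0 degrees, then excitement, arousal, distress, misery, depression, sleepiness, contentment); the labels, the neutral radius, and the function name are illustrative assumptions.

```python
import math

# Eight reference labels every 45 degrees, counter-clockwise from the
# positive valence axis (layout commonly drawn for Russell's circumplex).
OCTANTS = ["pleasure", "excitement", "arousal", "distress",
           "misery", "depression", "sleepiness", "contentment"]


def circumplex(valence: float, arousal: float, neutral_radius: float = 0.1):
    """Map a (valence, arousal) point in [-1, 1]^2 to (angle, radius, label).

    Valence is the horizontal axis and arousal the vertical axis; points
    closer to the center than `neutral_radius` are labeled "neutral".
    """
    radius = math.hypot(valence, arousal)
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    if radius < neutral_radius:
        return angle, radius, "neutral"
    # Shift by half a sector so each label covers +/- 22.5 degrees.
    index = int(((angle + 22.5) % 360.0) // 45.0)
    return angle, radius, OCTANTS[index]
```

For instance, an unpleasant, highly aroused state such as (-0.8, 0.6) falls at about 143 degrees, in the “distress” sector.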

The term valence refers to the inherent pleasantness or unpleasantness of a stimulus or situation. In the psychology of emotion, valence also describes how pleasant an emotional state is: states such as joy or the contemplation of beauty have a positive valence, as opposed to negatively valenced emotions such as fear or sadness, which are generally associated with unease, discomfort, or suffering.

Arousal, also called physiological or cerebral activation, refers to the psychophysiological state corresponding to activation of the brainstem’s ascending reticular activating system, involving the autonomic nervous system (increased heart rate and blood pressure) and the endocrine system (cortisol production). In behavioral terms, this activation shows up as a state of alertness, greater perceptual sensitivity, faster cognitive processing, and faster motor responses.

Vector Model of Emotion

The vector model of emotion appeared in 1992 [61]. This two-dimensional model consists of vectors pointing in two directions, forming a kind of “boomerang” shape. The model assumes that there is always an underlying arousal dimension and that valence determines the direction in which a particular emotion lies.

For example, a positive valence shifts the emotion along the upper vector and a negative valence shifts it along the lower vector [58]. In this model, high-arousal states are differentiated by their valence, while low-arousal states are more neutral and lie near the meeting point of the vectors. Vector models have been used most widely to test word and picture stimuli [60].

Consensus Model of Emotion

The positive activation-negative activation (PANA), or “consensus”, model, originally created by Watson and Tellegen in 1985 [62], suggests that positive affect and negative affect are two separate systems. As in the vector model, higher-arousal states tend to be defined by their valence, and lower-arousal states tend to be more neutral in terms of valence [58]. In the PANA model, the vertical axis represents low to high positive affect and the horizontal axis represents low to high negative affect. The valence and arousal dimensions lie at a 45-degree rotation relative to these axes [62].
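The 45-degree relationship can be made concrete as a coordinate rotation. The sketch below assumes normalized valence and arousal and a particular sign convention (high positive activation means pleasant and aroused, high negative activation means unpleasant and aroused); the function names are hypothetical and the scaling is purely geometric, not an empirical calibration.

```python
import math


def to_pana(valence: float, arousal: float) -> tuple:
    """Rotate (valence, arousal) by 45 degrees into
    (positive activation, negative activation) coordinates."""
    s = 1.0 / math.sqrt(2.0)
    pa = s * (valence + arousal)  # pleasant and aroused -> high PA
    na = s * (arousal - valence)  # unpleasant and aroused -> high NA
    return pa, na


def from_pana(pa: float, na: float) -> tuple:
    """Inverse rotation back to (valence, arousal)."""
    s = 1.0 / math.sqrt(2.0)
    return s * (pa - na), s * (pa + na)
```

Under this convention, a fear-like point such as valence -0.5 and arousal 0.5 has zero positive activation and a negative activation of about 0.71, which matches the idea that the two affect systems vary separately.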

Conclusion

Emotions mediate human relationships and are intrinsically linked with intelligence. They affect many areas, such as learning, memory, problem solving, humor, perception, and health. Emotions are at the heart of the human experience. Before technology, and even before the creation of languages, our emotions played a crucial role in communication, social connections, and decision-making. Today, emotions remain at the heart of our identity and of the way we communicate. They are thus our most natural way of interacting with the world, which has also led us to find ways of connecting emotions to our machines. Affective computing takes up this observation by applying artificial emotional intelligence to facilitate human-machine interaction. The techniques of artificial emotional intelligence can also be applied to help people with Alzheimer’s disease or autism, or in the care of the elderly …

References

- G. Castellano, Movement expressivity analysis in affective computers: from recognition to expression of emotion, Genova: University of Genova, 2008

- S. a. P. C. G. Pasquariello, «A simple facial animation engine,» chez In 6th Online World Conference on Soft Computing in Industrial Applications, 2001.

- M. W. Eysenck, Psychology, a student handbook, Hove: Psychology Press, 2000.4.

- J. T. e. T. Tan, «Affective Computing and Intelligent Interaction,» Computer Science, p. 981–995, 2005.5.

- R. Picard., Affective computing, Massachusetts: MIT press, 1997.6.

- J. a. A. M. K. Paul R. Kleinginna, Categorized List of Emotion Definitions, with Suggestions for a Consensual Definition, 1996.7.

- R. D. B. &. N. K. Russell, «Super-recognizers: People with extraordinary face recognition ability,» Psychonomic Bulletin & Review, p. 252, 2009.8.

- R. N. a. C. P. M. Ochs, «How a virtual agent should smile? Morphological and dynamic characteristics of virtual agent’s smiles,» chez International Conference on Intelligent Virtual Agents, 2010.9.

- M. O. E. B. K. P. Q. A. L. Y. D. J. H. Radoslaw Niewiadomski, La compréhension machine à travers l’expression non-verbale, Paris: Greta Team, 2011.10.

- N. T. C. C. J.-C. M. Mathieu Courgeon, «Postural Expressions of Action Tendency,» Nonverbal Behaviour, 2009.11.

- C. -. Skolnikoff, «Facial expression of emotion in nonhuman primates,» Nonverbal Behaviour, 1973.12.

- Redican, «Nonverbal communication in human interaction,» Nonverbal Behaviour, 1982.13.

- E. e. Friesen, «A New Pan-Cultural Facial Expression of Emotion,» Nonverbal Behaviour, pp. 159–168, 1978.14.

- E. e. Friesen, «A New Pan-Cultural Facial Expression of Emotion,» Nonverbal Behaviour, pp. 159–168, 1978.15.

- Ekman, «Felt, false and miserable smiles,» Nonverbal Behaviour, pp. 238–252, 1982.16, 17.

- Izard, Emotions, personality, and psychotherapy, New York: Plenum Press, 1991.18.

- J. A. J. D. E. C. T. K. V.-B. K. G. Munhall, Visual Prosody and Speech Intelligibility, British Columbia: Queen’s University, Kingston, Ontario, 2004.19.

- Mehrabian, Nonverbal concomitants of perceived and intended persuasiveness., Los Angeles, 1969.21.

- Y. &. L. C. Lussier, Rapport de recherche soumis aux Centres de traitement pour hommes à comportements violent, Québec: Université du Québec à Trois-Rivières, 2002.22.

- S. Zhan, «Speech emotion recognition based on Fuzzy least squares support vector machines,» chez 7th world congress on intelligent control and automation, Chongqing, 2008.23.

- Y. Tang, Deep learning using linear support vector machines, arXiv, 2013.24.

- K. B. a. G. W. T. T. Devries, «Multi-task learning of facial landmarks and expression,» Computer and Robot Vision, p. 98–103, 2014.25.

- P. L. C. C. L. a. X. T. Z. Zhang, «Learning social relation traits from face images,» chez International Conference on Computer Vision, 2015.26.

- D. T. J. Y. H. X. Y. L. a. D. T. Y. Guo, «Deep neural networks with relativity learning for facial expression recognition,» Multimedia & Expo Workshops (ICMEW), 2016.27.

- [28] B.-K. Kim et al., “Fusing aligned and non-aligned face information for automatic affect recognition in the wild: A deep learning approach,” in Conference on Computer Vision and Pattern Recognition Workshops, 2016.

- [29] C. Pramerdorfer and M. Kampel, “Facial expression recognition using convolutional neural networks: State of the art,” arXiv, 2016.

- [30] T. Connie et al., “Facial expression recognition using a hybrid CNN–SIFT aggregator,” International Workshop on Multi-disciplinary Trends in Artificial Intelligence, pp. 139–149, 2017.

- [31] G. Castellano et al., “Emotion Recognition through Multiple Modalities: Face, Body Gesture, Speech,” Computer Science, 2016.

- [32] T. Connie et al., “Facial expression recognition using a hybrid CNN–SIFT aggregator,” in International Workshop on Multi-disciplinary Trends in Artificial Intelligence, 2017.

- [33] H. A. Simon, Models of Man: Social and Rational; Mathematical Essays on Rational Human Behavior in a Social Setting, New York: Wiley, 2004.

- [34] C. Darwin, The Expression of the Emotions in Man and Animals, New York: Oxford University Press, 1872.

- [36] M. Argyle, Bodily communication, London: Methuen, 1975.

- [37] N. Hadjikhani and B. de Gelder, “Seeing fearful body expressions activates the fusiform cortex and amygdala,” Current Biology, pp. 2201–2205, 2003.

- [38, 42] Q. Liu et al., “Muscle memory,” The Journal of Physiology, pp. 775–776, 2011.

- [39] M. Coulson, “Attributing emotion to static body postures: Recognition accuracy, confusions, and viewpoint dependence,” Nonverbal Behaviour, 2004.

- [40] J. Montepare et al., “The use of body movements and gesture as cues to emotions in younger and older adults,” Nonverbal Behaviour, pp. 133–152, 1999.

- [41] R. Walk et al., “Perception of the smile and other emotions of the body and face at different distances,” Psychonomic Soc., p. 510, 1988.

- [43] P. Ekman and W. V. Friesen, “Nonverbal behaviour in psychotherapy research,” Research in Psychotherapy, pp. 179–216, 1968.

- [45] S. Piana et al., “Real-time Automatic Emotion Recognition from Body Gestures.”

- [46, 48] S. Saha et al., “A study on emotion recognition from body gestures using Kinect sensor,” in International Conference on Communication and Signal Processing, 2014.

- [47] A. Rivière and B. Godet, “L’affective computing: rôle adaptatif des émotions dans l’interaction homme–machine,” Report, Charles de Gaulle University, Lille, France, pp. 9–12, 33–38, 2003.

- [49] C. Lalanne, “La cognition: l’approche des Neurosciences Cognitives,” Report, Department of Computer Science, René Descartes University, Paris, France, pp. 26–28, 2005.

- [50] Y. Hsu et al., “Automatic ECG-Based Emotion Recognition in Music Listening,” in International Conference on Computational Intelligence and Natural Computing, Wuhan, 2009.

- [51] Y. Xu and G. Liu, “A Method of Emotion Recognition Based on ECG Signal,” in International Conference on Computational Intelligence and Natural Computing, Wuhan, 2009.

- [52] C. Defu et al., “Toward Recognizing Two Emotion States from ECG Signals,” in International Conference on Computational Intelligence and Natural Computing, Wuhan, 2009.

- [53] R. Fourati et al., “Unsupervised Learning in Reservoir Computing for EEG-based Emotion Recognition,” 2018.

- B. Park et al., “Seven emotion recognition by means of particle swarm optimization on physiological signals: Seven emotion recognition,” in International Conference on Networking, Sensing and Control, Beijing, 2012.

- G. Colombetti, “From affect programs to dynamical discrete emotions,” Philosophical Psychology, pp. 407–425, 2009.

- P. Ekman, “An Argument for Basic Emotions,” Cognition and Emotion, pp. 169–200, 1992.

- P. Ekman, “Behavioral markers and recognizability of the Smile of Enjoyment,” Personality and Social Psychology, 1993.

- K. Scherer, “Psychological models of emotion,” in The Neuropsychology of Emotion, pp. 137–162, 2000.

- P. Ekman and W. Friesen, Facial Action Coding System: A Technique for the Measurement of Facial Movement, Palo Alto: Consulting Psychologists Press, 1978.

- P. Ekman, W. V. Friesen and J. C. Hager, Facial Action Coding System, Salt Lake City: A Human Face, 2002.

- C. Hjortsjö, Man’s face and mimic language, 1969.

- J. Hamm, C. G. Kohler, R. C. Gur and R. Verma, “Automated Facial Action Coding System for dynamic analysis of facial expressions in neuropsychiatric disorders,” Journal of Neuroscience Methods, pp. 237–256, 2011.

- A. Freitas-Magalhães, Scientific measurement of the human face: F-M FACS 3.0 – pioneer and revolutionary, Porto: FEELab Science Books, 2018.

- A. Freitas-Magalhães, Facial Action Coding System 3.0: Manual of Scientific Codification of the Human Face, Porto: FEELab Science Books, 2018.

- D. C. Rubin and J. Talarico, A comparison of dimensional models of emotion, 2009.

- J. Russell, “A circumplex model of affect,” Journal of Personality and Social Psychology, pp. 1161–1178, 1980.

- N. A. Remington, L. R. Fabrigar and P. S. Visser, “Re-examining the circumplex model of affect,” Journal of Personality and Social Psychology, pp. 286–300, 2000.

- M. M. Bradley, M. K. Greenwald, M. Petry and P. J. Lang, “Remembering pictures: Pleasure and arousal in memory,” Journal of Experimental Psychology: Learning, Memory, and Cognition, pp. 379–390, 1992.

- D. Watson and A. Tellegen, “Toward a consensual structure of mood,” Psychological Bulletin, pp. 219–235, 1985.